Micron Technology (MU) shares surged in early trading Monday as analysts continued to highlight the value of the company's memory chips, a key component in the buildout of AI systems that could generate billions of dollars in value over the coming years.

Micron, which is locked in a fierce battle for memory-chip market share with Asian rivals SK Hynix and Samsung, is quickly gaining ground in AI with a new chip designed to support generative AI applications.

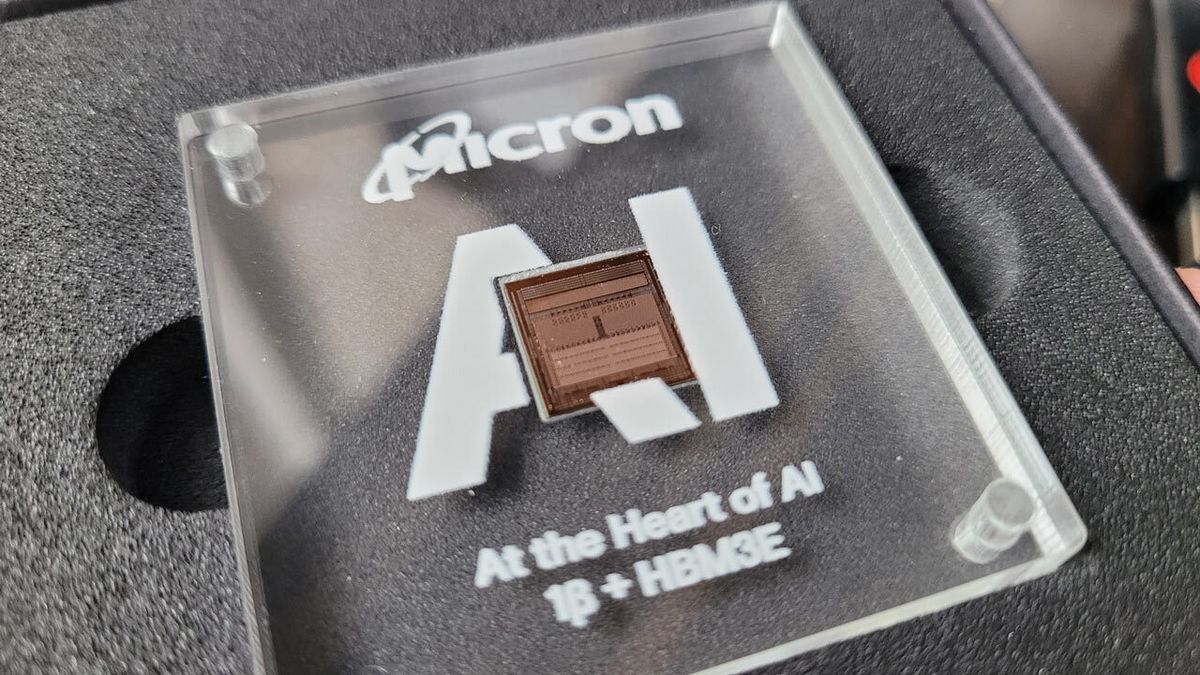

High-bandwidth memory (HBM) chips are designed to be integrated into larger AI processors, such as those made by industry leader Nvidia (NVDA), to boost performance and reduce power consumption.

“We are poised to generate several hundred million dollars of revenue from HBM in fiscal 2024 and anticipate HBM revenues to contribute positively to our DRAM and overall gross margins starting in the fiscal third quarter,” stated CEO Sanjay Mehrotra during a recent investor briefing.

“With HBM demand already exceeding our supply for calendar year 2024, and a significant portion of our 2025 supply already accounted for, we are experiencing robust demand,” he added.

That demand helped Micron beat Wall Street's expectations with a surprise fiscal-second-quarter profit of 42 cents per share, well ahead of the forecast loss of 25 cents a share. It also supported a bullish outlook of about $6.6 billion in revenue for the current quarter.

Micron also expects to win new customers, since higher-capacity HBM lets them "integrate more memory per GPU, thereby facilitating more robust AI training and inference solutions."

Bank of America Securities analyst Vivek Arya shares that optimism. He forecasts that rising HBM demand will lift the overall market to roughly $20 billion by 2027, with Micron's share growing from about 5% today to around 20%.
