For the past week, I’ve been experimenting with OpenAI’s new Advanced Voice Mode (AVM), and it offers a compelling glimpse into a potential AI-powered future. This feature, which is still in a limited alpha test, allows me to interact with my phone using only my voice.

AVM responded with laughter and jokes, and even shared that it was having “a great time,” creating a more engaging, hands-free experience than traditional voice commands.

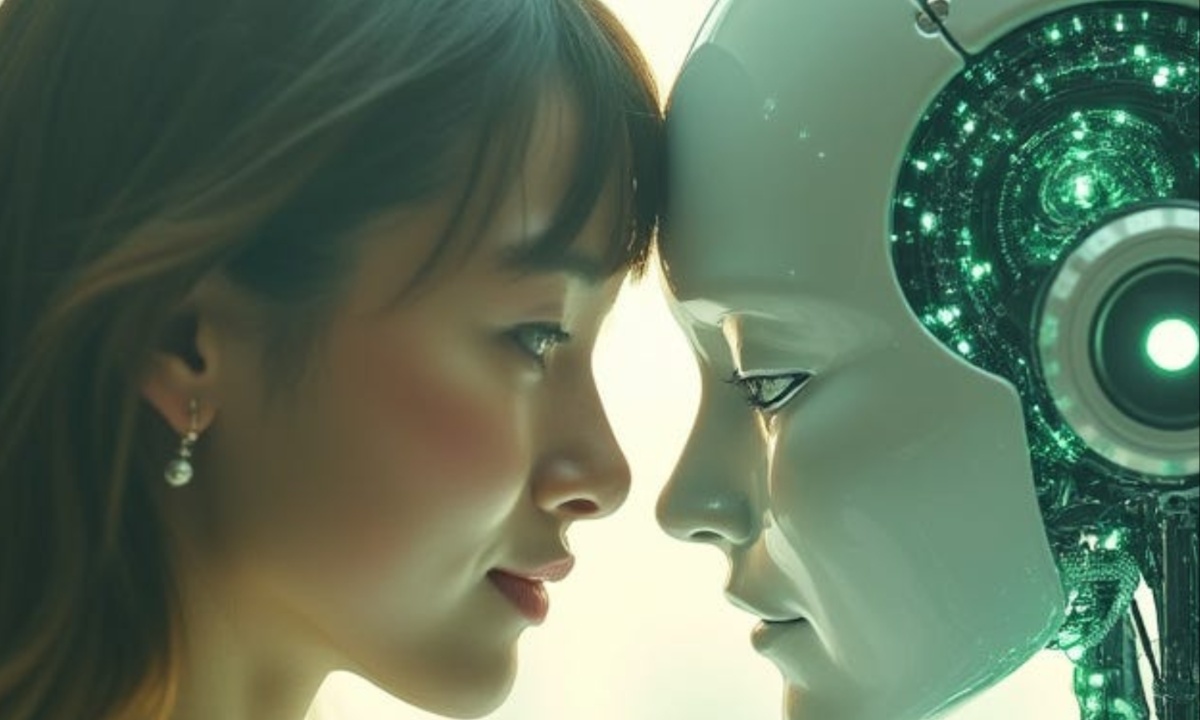

AVM doesn’t make ChatGPT more intelligent, but it does make interactions feel more natural and friendly. The feature introduces a novel way of interfacing with AI and devices, which is both exciting and a bit unsettling. Despite some glitches, I found myself enjoying the experience, though the concept of such a responsive and seemingly personable AI is somewhat eerie.

This new feature aligns with OpenAI CEO Sam Altman’s vision of transforming human-computer interactions. Altman has discussed the concept of AI agents that can handle various tasks simply by being asked, which he believes will have substantial benefits. AVM represents a step toward this vision, showcasing a more dynamic and interactive form of AI assistance.

In a fun test, I asked AVM to order Taco Bell in the style of Obama. The resulting response, complete with a humorous imitation, was surprisingly entertaining and showed AVM’s ability to mimic tones and cadence. This level of interaction demonstrated AVM’s potential to bring a sense of joy and personality to conversations with AI.

However, while AVM excels at engaging in conversations and understanding complex topics, it falls short in some practical areas. It currently cannot perform tasks like setting timers or checking the weather, and its ability to interact with other APIs is limited. Compared with Google’s Gemini Live, AVM is stronger in emotional expression and speed, but both suffer from glitches and technical issues.

The development of AVM raises concerns about the nature of human-AI interactions. The trend of using AI for companionship and advice mirrors social media’s role in creating artificial connections.

As AI chatbots become more integrated into our lives, there’s a risk they could become addictive and exploit our social needs. Despite these concerns, innovations like AVM suggest that more advanced and personalized AI interactions could become commonplace in the near future.
